Australia’s world-first ban on social media for children under 16 takes effect in just over a month on December 10, 2025, yet nobody knows exactly how it will work.

The Online Safety Amendment (Social Media Minimum Age) Act 2024 passed parliament in a rushed process in November last year, imposing potential fines of up to $50 million on platforms that fail to keep out underage users. While 77% of Australians support the ban, only 25% believe it will actually work, and with weeks until launch, the mounting controversies, technical failures, and expert warnings suggest it’s becoming exactly the shitshow sceptics predicted.

The eSafety Commissioner Julie Inman Grant admitted the government is “building the plane while flying it.” Over 140 experts signed an open letter calling it “too blunt an instrument.” Privacy advocates describe it as creating the most invasive online measures in Australian history. Children, the supposed beneficiaries, weren’t meaningfully consulted at all. And, while I rarely advocate for listening to tech companies, they warn it’s “extremely difficult” to enforce.

The legislative process itself became the first major controversy. The government introduced the bill on November 21, 2024, and allowed just 24 hours for public submissions, an extraordinary constraint for legislation affecting millions of Australians. The Senate committee received 15,000 submissions in one day but had only four days to review them before the bill passed both houses on November 28-29, 2024. They didn’t even bother presenting most of the submissions.

USYD researchers called this “an outrageous abuse of process, and one that is not conducive to making evidence-based, informed decisions in a democracy.” The Australian Psychological Society refused to submit a response at all, stating the bill’s “far-reaching implications for child development, mental health, and society” made it impossible to properly assess in a single day. Even within the government, tensions emerged about granting excessive power to the eSafety Commission.

The rushed timeline meant the law passed before the government’s own age verification technology trial was complete. The Age Assurance Technology Trial, which tested 60+ technologies from 48 vendors, didn’t release its final report until two months ago, on August 31, 2025 (nine months after the law passed). This cart-before-the-horse approach left platforms scrambling to implement a law without knowing what methods would be deemed compliant.

The age verification requirement creates a piece of backdoor surveillance infrastructure that affects every Australian, not just children. Elon Musk labeled it “a backdoor way to control access to the Internet by all Australians,” a rare instance where his fevered ranting and hyperbole align with expert consensus.

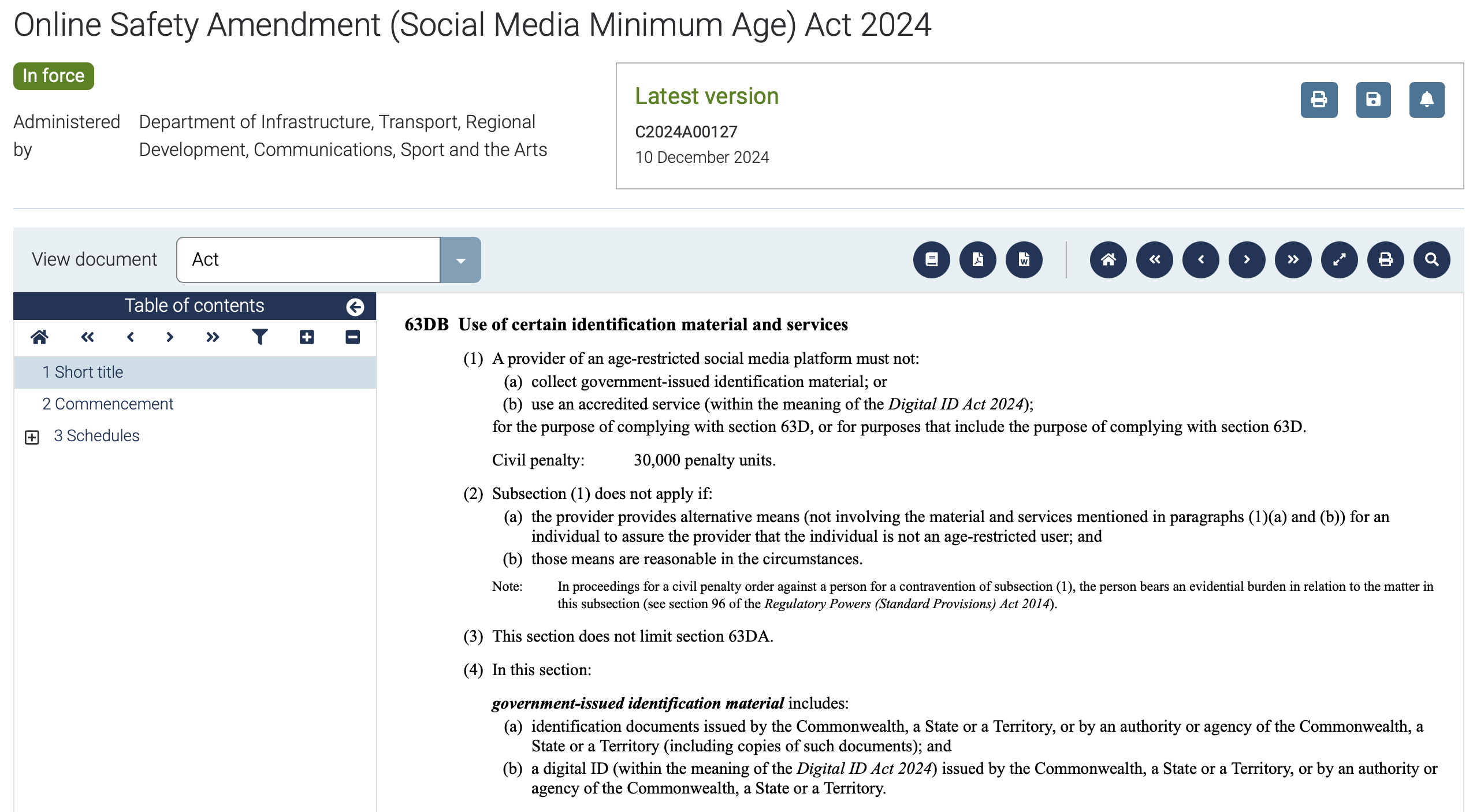

The paradox is built into the law itself: s 63DB prohibits platforms from directly collecting government-issued IDs or requiring Digital ID systems, yet platforms must verify ages. This forces reliance on unproven technologies like facial biometric scanning, behavioural tracking, and AI age estimation, all of which require invasive data collection. Welcome to the age of internet phrenology!

The eSafety Commissioner acknowledged platforms would use a “waterfall approach” involving “layered safety measures” that, privacy experts note, often relies on collecting increasing amounts of personal data at multiple steps of user interaction.
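To show why a waterfall tends to collect more data the closer a user sits to the age line, here is a rough Python sketch. Everything in it is hypothetical: the step names, the `confident_only` rule, the margins, and the user fields are my own illustration, not any platform's disclosed design or anything specified by eSafety.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    name: str
    data_collected: str  # what the platform must gather to run this step
    check: Callable[[dict], Optional[bool]]  # True/False = decided, None = unsure


@dataclass
class Outcome:
    is_16_or_over: Optional[bool]
    data_collected: list = field(default_factory=list)


def run_waterfall(user: dict, steps: list) -> Outcome:
    """Run steps in order and stop at the first confident answer."""
    outcome = Outcome(is_16_or_over=None)
    for step in steps:
        outcome.data_collected.append(step.data_collected)
        decision = step.check(user)
        if decision is not None:
            outcome.is_16_or_over = decision
            break
    return outcome


def confident_only(estimate_key: str, margin: float):
    """Hypothetical rule: decide only if the estimate is `margin` years clear of 16."""
    def check(user: dict) -> Optional[bool]:
        estimate = user.get(estimate_key)
        if estimate is None or abs(estimate - 16) < margin:
            return None  # too close to call, escalate to the next step
        return estimate >= 16
    return check


# Assumed ordering: cheapest and least intrusive signal first.
STEPS = [
    Step("behavioural inference", "usage patterns", confident_only("behaviour_estimate", 4)),
    Step("facial age estimation", "face scan", confident_only("face_estimate", 2)),
    Step("fallback verification", "additional identity evidence", confident_only("verified_age", 0)),
]

adult = run_waterfall({"behaviour_estimate": 35}, STEPS)
teen = run_waterfall(
    {"behaviour_estimate": 17, "face_estimate": 16.5, "verified_age": 17}, STEPS
)
print(adult.data_collected)
print(teen.data_collected)
```

In this toy version, the clearly adult user is waved through on the first signal, while the 17-year-old, who is legally allowed on the platform, falls through every layer and hands over the most data. That is the pattern privacy experts are describing.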

The Australian Human Rights Commission warned that “all Australians may be required to prove their identity in order to access social media” and that “this may potentially require all Australians to provide social media companies with sensitive identity information, which poses a risk to our privacy rights in light of recent examples of data breaches.” Meta was fined €251 million in 2024 for inadequate data protection, yet now must build age verification systems handling biometric data for millions. Discord has already compromised the identity of 68,000 Australians.

Digital Rights Watch emphasised that “most forms of age verification require the provision of additional personal information” and that “incentivising companies and government agencies to collect, use and store additional personal information in order to conduct age verification creates additional privacy and security risk, which in-turn can exacerbate online harms.”

The government’s own 2023 assessment concluded the “age assurance market is, at this time, immature”, yet proceeded with legislation anyway. The trial found “concerning evidence” that some providers were “over-anticipating eventual needs of regulators” by building excessive data retention capabilities for law enforcement access, creating what cybersecurity experts warn could be a honey pot for hackers.

The age assurance trial concluded age assurance is technically feasible, but with major caveats that undermine confidence in the system. Trial lead Tony Allen stated accuracy would be “90-99%, not 100%” and warned: “People are in this mindset that if any child can get onto social media, the whole system is a failure. Quite frankly, those people are in a dream world.”

That dream world comment is an understatement. The trial’s own technical annexes reveal its positive headline findings are a masterclass in misdirection. The report only achieves “effectiveness” for facial age estimation by implementing “buffer thresholds” that systematically block legally eligible users. The trial’s data tables show that at the 13+ gate, 13-year-olds are correctly identified only 76.43% of the time, and 14-year-olds only 89.66% (both failing a 95% accuracy target). To clear the 13+ gate with over 95% accuracy, the trial’s own data shows a user would need to be 16. That isn’t age assurance, it’s the mass, arbitrary exclusion of eligible teenagers. What fun!
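To put a number on that exclusion, here is a minimal Python sketch that uses only the two per-age figures quoted above and the 95% target. The names are mine, not the trial's, and it assumes that “correctly identified” means an eligible user gets through the gate, so every miss is a wrongful block.

```python
# Per-age pass rates at the 13+ gate, as quoted from the trial's data tables.
GATE_13_ACCURACY = {13: 76.43, 14: 89.66}  # percent correctly identified
TARGET = 95.0  # percent, the accuracy target cited above


def wrongly_blocked_per_1000(accuracy_pct: float) -> float:
    """Eligible users turned away per 1,000 attempts (assumes a miss means a block)."""
    return round((100.0 - accuracy_pct) * 10, 1)


for age, accuracy in GATE_13_ACCURACY.items():
    shortfall = round(TARGET - accuracy, 2)
    blocked = wrongly_blocked_per_1000(accuracy)
    print(f"age {age}: {shortfall} points short of target, "
          f"~{blocked} eligible users blocked per 1,000")
```

On those figures, roughly 236 of every 1,000 eligible 13-year-olds and 103 of every 1,000 eligible 14-year-olds would be turned away at the gate.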

The report’s other conclusions are just as hollow. It claims “no substantial technological limitations” for ID-based Age Verification, yet admits in its own technical annex that “several providers lacked the ability to check documents against live government databases” to see if an ID was lost or stolen.

Google testified to a Senate committee in October 2025 that the law is “extremely difficult to enforce” and warned it “will not make children safer online.” Meta expressed concern about “numerous challenges” and “significant new engineering and age assurance challenges.” TikTok called the legislation “rushed” and warned it “risked pushing younger users to darker corners of the internet.” Even the Digital Industry Group (representing all major platforms) stated: “No one can confidently explain how it will work in practice—the community and platforms are in the dark.”

The three main verification methods all have critical flaws:

Biometric age estimation using facial scanning achieves 90-99% accuracy in lab conditions, but the trials have found inconsistent performance across skin tones and genders. A mean absolute error of 1.3-1.5 years means 16-year-olds could be classified as 14 or 18. Voice biometrics, described as less mature technology, struggles with accents, language fluency, and disabilities.

Document verification requiring ID uploads is the most reliable method, but the law prohibits it unless alternative methods are also offered. It also excludes populations less likely to possess government IDs and raises massive data breach concerns.

Behavioural inference analysing account patterns, school schedules, and content preferences is easily gamed and unproven at scale. The trial concluded “no single ubiquitous solution works for all contexts” and suggests that coordination between different services will be required, but no unified or coordinating infrastructure exists.
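To get a feel for what the facial estimation error quoted above (a mean absolute error of 1.3-1.5 years) does at a hard 16+ cutoff, here is a back-of-the-envelope Python sketch. It assumes the estimation error is zero-mean and Gaussian, which is my simplification and not something the trial states; under that assumption the spread follows from the MAE as sigma = MAE × sqrt(π/2). The function names are illustrative.

```python
import math


def sigma_from_mae(mae: float) -> float:
    """For zero-mean Gaussian error, MAE = sigma * sqrt(2/pi)."""
    return mae * math.sqrt(math.pi / 2)


def prob_estimated_under(true_age: float, threshold: float, mae: float) -> float:
    """P(estimated age < threshold) under the assumed Gaussian error model."""
    sigma = sigma_from_mae(mae)
    z = (threshold - true_age) / sigma
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


for mae in (1.3, 1.5):
    for true_age in (16, 17, 18):
        p = prob_estimated_under(true_age, threshold=16, mae=mae)
        print(f"MAE {mae}: true age {true_age} -> "
              f"{p:.0%} chance of reading as under 16")
```

Under this symmetric model, a user who has just turned 16 is a coin flip at the gate. The odds of being wrongly blocked shrink with each extra year of age but do not vanish. Real systems may be biased in one direction or vary by demographic group, so treat the output as an illustration of scale only.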

The trial identified the most scalable circumvention methods as AI-generated spoofing and VPNs. When the UK introduced similar laws in July 2025, VPN usage spiked immediately. The eSafety Commissioner acknowledges platforms must prevent under-16s from using VPNs to pretend to be outside Australia, but there are no proven methods to consistently, reliably detect VPN usage without extreme surveillance that would violate privacy rights.

Even a VPN provider noted: “We are sceptical that VPNs will play a significant role in the long term. Only reliance on parental control apps and education can provide sustainable outcomes”. It’s pretty astounding that a VPN company, which stands to profit when its product is used to circumvent the law, will still point out that the law is riddled with problems.

YouTube was initially exempt because of its educational value (Google claims that 84% of Australian teachers use it monthly). It became a major flashpoint, and exposed the policy’s inconsistencies, when it was suddenly added to the ban on July 30, 2025, after eSafety Commissioner (and someone who cookers might claim is a CIA asset) Julie Inman Grant recommended its inclusion. The decision came after a survey showing 37% of Australian children aged 10-17 reported seeing harmful content on YouTube.

Google threatened to sue the Australian government over the inclusion, warning the decision could be challenged on the grounds that it restricts the implied constitutional freedom of political communication and violates procedural fairness. Communications Minister Anika Wells responded defiantly, stating that Australia “will not be intimidated by legal threats when this is a genuine fight for the well-being of Australian kids.” Which, you know, is a good line to draw, because we shouldn’t trust Google. But also, in this case, Google’s probably right.

The reversal highlighted arbitrary line-drawing. The Wiggles successfully lobbied to keep YouTube initially exempt. Gaming platforms like Roblox remain in limbo, with the eSafety Commissioner warning the ban could extend to them despite gaming being technically exempt. Snapchat argues it’s primarily a messaging platform like WhatsApp and should be exempt, noting 75% of time on Snapchat in Australia is spent messaging. The government clearly disagreed.

With just weeks until implementation, platforms still don’t know which services are covered or what verification methods will be deemed compliant. Minister Wells described the rules as “working rules” rather than a fixed solution, an extraordinary admission for a law about to take effect.

The most damning criticism comes from mental health professionals and youth advocates who warn the ban will harm the very children it aims to protect. Christopher Stone, Executive Director of Suicide Prevention Australia, stated: “Social media provides vital connections for many young Australians, allowing them to access mental health resources, peer support networks, and a sense of community. Cutting off this access risks exacerbating feelings of loneliness and isolation. The government is running blindfolded into a brick wall by rushing this legislation.”

A mental health service director revealed that 73% of young people across Australia accessing mental health support did so through social media. ReachOut Organisation and headspace (National Youth Mental Health Foundation) expressed serious concerns about cutting off these lifelines.

LGBTQIA+ youth face particular risk. Senator David Shoebridge warned: “This policy will hurt vulnerable young people the most, especially in regional communities and especially the LGBTQI community, by cutting them off.” ANU found young non-binary people who regularly use social media reported higher levels of life satisfaction compared to the general population… connections they’ll lose under the ban. Professor Daniel Angus from QUT noted: “Children who are disadvantaged, live in rural areas or are from minority groups rely on social media for community, education and advocacy. By imposing a blanket ban, the government overlooks these essential and diverse experiences, thereby exacerbating existing inequalities.” A 14-year-old refugee in Darwin pointed out the ban could sever her only link to her grandmother overseas.

Over 140 Australian and international experts signed an open letter calling the ban “too blunt an instrument to address risks effectively.” The Australian Human Rights Commission stated: “On balance, the Australian Human Rights Commission does not believe that a blanket ban on social media for under-16s is the right response.” Amnesty International Australia warned: “A ban that furthers isolation, and obliterates connections and community, will not meet the government’s objectives of improving young people’s lives.”

Young people were conspicuously absent from consultation. Leo Puglisi, 17-year-old founder of youth news outlet 6 News Australia, criticised the lack of consultation with affected young people and noted: “This is a government that is obviously not made up of teenagers, but it’s making law about social media when they didn’t grow up in the social media age.”

With the December deadline approaching, major platforms are racing to implement systems they don’t believe will work. Meta plans to remove hundreds of thousands of users under 16 and will reach out to confirmed underage account holders offering a choice: delete data immediately or allow Meta to store it until they turn 16. The company will use a waterfall approach with AI age estimation, behavioural tracking, and tiered verification based on risk factors.

TikTok faces removing approximately 200,000 under-16 users in Australia. The platform stated: “Where we identify someone who is saying they’re 25 but whose behaviours would indicate they’re below the age of 16, we will have those accounts deactivated.” Snapchat must remove around 440,000 under-16 users while maintaining its argument that it shouldn’t be classified as social media at all.

YouTube users under 16 can still view content without accounts but cannot comment, upload, or maintain personalised recommendations, essentially reverting to 2005-era YouTube. The platform warns this makes children “less safe” as parental controls and safety features won’t engage. They’re probably correct.

With weeks until enforcement begins, no platform has publicly disclosed its specific age verification system. The regulatory guidance released September 16, 2025 remains “principles-based” rather than prescriptive, requiring platforms to “define acceptable error thresholds” themselves based on risk profiles. There’s no mandatory minimum accuracy threshold, so the government is effectively outsourcing policy design to the companies it’s regulating.

The eSafety Commissioner will begin enforcement on December 10, but stated she expects informal engagement first before enforcement action. Given the technical uncertainties and untested systems, the first months of implementation will be chaotic at best.

Australia’s law represents the world’s strictest national social media age restriction, stricter than China’s gaming time limits for minors. Multiple countries including Norway, the UK, and several U.S. states are watching closely, with Prime Minister Anthony Albanese likely to present the model at international forums. European Commission President Ursula von der Leyen called Australia’s decision “plain common sense.”

But the law could strain Australia’s relationship with key ally the United States. Elon Musk’s vocal criticism and reported warnings from U.S. Vice President JD Vance about potential retaliatory tariffs create diplomatic tension. The U.S. is Australia’s fourth-largest export market. Reuters noted the ban “could strain Australia’s relationship with key ally” at a time when, for better or worse (mostly worse), U.S. tech companies dominate global digital infrastructure.

The precedent could embolden authoritarian regimes to justify internet censorship under the guise of child protection, which is exactly what human rights advocates warned about when Australia passed its “misinformation” bill, which Musk also opposed (a broken clock, etc.).

Australian polling reveals the core tension: 77% support the ban, but only 25% think it will actually work, versus 67% who think it won’t achieve its aims. Parents want something to be done, but don’t believe this will do it. The government is delivering political theatre rather than effective policy.

The law places “full responsibility” on platforms (not parents or children) but provides no penalties for underage users who circumvent restrictions (not that that’s a good idea either). It prohibits collecting the documents that verify age while requiring age verification. It aims to protect privacy while mandating mass surveillance infrastructure. It seeks to keep children safe online by pushing them to unregulated spaces or teaching them to use VPNs and circumvention tools.

Professor Daniel Angus of QUT called it “illogical and uninformed,” stating “the potential harms of social media the government cited are not sufficiently supported by high-quality research.” Human Rights Law Centre senior lawyer David Mejia-Canales argued: “Instead of piecemeal age restrictions, we need an overarching duty of care that ensures digital platforms prioritise the safety, privacy, and wellbeing of all their users, not just the youngest.”

The alternative approaches, including but not limited to safety by design, duty of care obligations, algorithmic transparency, and digital literacy education, were all recommended by the government’s own Parliamentary Select Committee. These recommendations were ignored in favour of a “simple”, politically popular ban that won’t work and will cause significant harm.

Australia is about to conduct an unprecedented experiment in internet regulation with all its citizens as test subjects. The implementation of the world’s first comprehensive social media age ban arrives with fundamental questions unanswered: Which technologies will platforms use? How will privacy be protected? What happens to vulnerable youth who lose vital support networks? How will anyone stop VPN circumvention? Will this push children to less regulated platforms?

The rushed legislative process, dismissal of expert consensus, lack of youth consultation, unproven technology, mounting privacy concerns, and looming implementation deadline with unclear compliance methods all point toward a policy disaster in the making. Whether measured by technical feasibility, human rights impacts, privacy protection, or likely effectiveness at actually protecting children, the law fails on nearly every metric experts use to evaluate internet regulation.

Communications Minister Anika Wells inadvertently captured the situation perfectly: these are “working rules”, not a fixed solution, meaning the government has legislated a framework without knowing if it will work, hoping to figure it out as platforms and users encounter the inevitable problems. For a law affecting millions of Australians and setting global precedent, “building the plane while flying it” is not reassuring. It’s an admission that Australia is about to crash-test its own citizens’ digital rights and children’s wellbeing in pursuit of a policy that polls show most Australians think won’t succeed.

The mandatory review in 2027 will likely document what experts already predict: widespread circumvention via VPNs, harm to vulnerable youth populations, massive privacy costs for minimal safety gains, and children pushed to less regulated corners of the internet. The question is whether the government will admit the failure or double down with even more invasive enforcement mechanisms. Based on the rushed, evidence-free process that created this law, the latter seems more likely.

Cover Image from Pleasing stories for good children: with pictures from the UCLA Children’s Book Collection. Phrenology image from Vaught’s Practical Character Reader. Fools image from 18th-century chapbook entitled The World Turned Upside Down or The Folly of Man, Exemplified in Twelve Comical Relations upon Uncommon Subjects.